Benchmarking Low-Light Image Enhancement and Beyond

Abstract

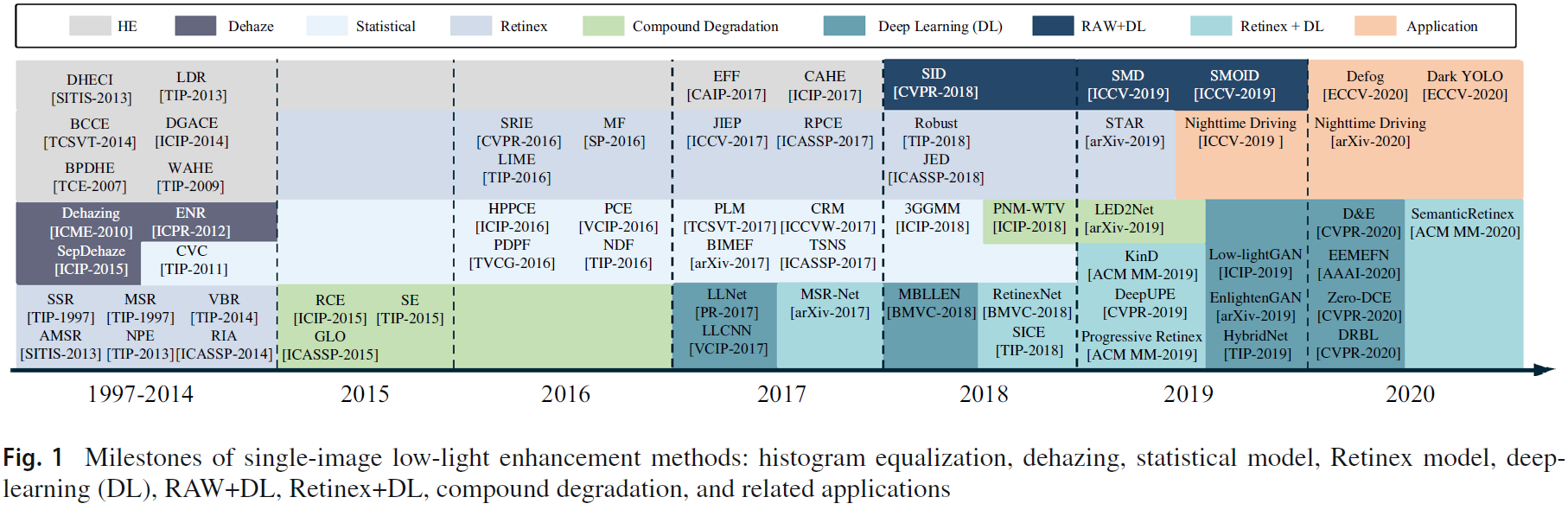

In this paper, we present a systematic review and evaluation of existing single-image low-light enhancement algorithms. Besides the commonly used low-level vision oriented evaluations, we additionally consider measuring machine vision performance in the low-light condition via the face detection task to explore the potential of joint optimization of high-level and low-level vision enhancement. To this end, we first propose a large-scale low-light image dataset serving both low-level and high-level vision with diversified scenes and contents as well as complex degradation in real scenarios, called Vision Enhancement in the LOw-Light condition (VE-LOL). Beyond paired low/normal-light images without annotations, we additionally include analysis resources related to humans, i.e., face images in the low-light condition with annotated face bounding boxes. Then, efforts are made on benchmarking from the perspective of both human and machine vision. A rich variety of criteria is used for the low-level vision evaluation, including full-reference, no-reference, and semantic similarity metrics. We also measure the effects of low-light enhancement on face detection in the low-light condition. State-of-the-art face detection methods are used in the evaluation. Furthermore, with the rich material of VE-LOL, we explore the novel problem of joint low-light enhancement and face detection. We develop an enhanced face detector to apply low-light enhancement and face detection jointly. The features extracted by the enhancement module are fed to the successive layer of the detection module that has the same resolution. Thus, these features are intertwined to jointly learn useful information across the two phases, i.e., enhancement and detection. Experiments on VE-LOL provide a comparison of state-of-the-art low-light enhancement algorithms, point out their limitations, and suggest promising future directions.
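As a rough illustration of the resolution-matched feature passing described above, the following minimal sketch pairs each enhancement feature map with the detection feature map of the same spatial size and fuses them. The function names, the single-channel nested-list representation, and the use of element-wise addition as the fusion operator are all assumptions made for illustration; the abstract does not specify how the features are combined, and this is not the authors' implementation.

```python
# Illustrative sketch only: names, shapes, and additive fusion are assumptions.
from typing import Dict, List, Tuple

FeatureMap = List[List[float]]   # single-channel H x W map, kept simple on purpose
Resolution = Tuple[int, int]     # (height, width)


def resolution(fmap: FeatureMap) -> Resolution:
    """Return the (height, width) of a feature map."""
    return (len(fmap), len(fmap[0]) if fmap else 0)


def fuse_by_resolution(
    enhancement_feats: List[FeatureMap],
    detection_feats: List[FeatureMap],
) -> List[FeatureMap]:
    """Add each enhancement map to the detection map of the same resolution.

    Detection maps with no same-resolution enhancement map pass through unchanged.
    """
    by_res: Dict[Resolution, FeatureMap] = {
        resolution(f): f for f in enhancement_feats
    }
    fused: List[FeatureMap] = []
    for det in detection_feats:
        enh = by_res.get(resolution(det))
        if enh is None:
            # No matching enhancement feature: copy the detection map as-is.
            fused.append([row[:] for row in det])
        else:
            # Hypothetical fusion operator: element-wise addition.
            fused.append(
                [[d + e for d, e in zip(drow, erow)]
                 for drow, erow in zip(det, enh)]
            )
    return fused
```

In a real network the maps would be multi-channel tensors and the fusion could equally be concatenation or a learned layer; the point here is only the matching of features by resolution.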
Our dataset has supported the Track "Face Detection in Low Light Conditions" of CVPR UG2+ Challenge (2019–2020).

Download

- VE-LOL-H: [Dropbox] [Baiduyun] (Extraction Code: 7c8i)

- VE-LOL-L: [Dropbox] [Baiduyun] (Extraction Code: a2x3)

- VE-LOL-L Results: [Dropbox] [Baiduyun] (Extraction Code: 9okw)

- Paper and Supplementary Materials: [Paper] [Supplementary Materials]

Citation

@ARTICLE{ll_benchmark,

author={Liu, Jiaying and Xu, Dejia and Yang, Wenhan and Fan, Minhao and Huang, Haofeng},

journal={International Journal of Computer Vision},

title={Benchmarking Low-Light Image Enhancement and Beyond},

year={2021},

volume={129},

number={},

pages={1153--1184},

doi={10.1007/s11263-020-01418-8}

}

@ARTICLE{poor_visibility_benchmark,

author={Yang, Wenhan and Yuan, Ye and Ren, Wenqi and Liu, Jiaying and Scheirer, Walter J. and Wang, Zhangyang and Zhang, and others},

journal={IEEE Transactions on Image Processing},

title={Advancing Image Understanding in Poor Visibility Environments: A Collective Benchmark Study},

year={2020},

volume={29},

number={},

pages={5737--5752},

doi={10.1109/TIP.2020.2981922}

}

@inproceedings{Chen2018Retinex,

title={Deep Retinex Decomposition for Low-Light Enhancement},

author={Wenjing Wang and Chen Wei and Wenhan Yang and Jiaying Liu},

booktitle={British Machine Vision Conference},

year={2018},

}

Contact

For questions and result submissions, please contact Wenhan Yang at yangwenhan@pku.edu.cn

The website code is borrowed from the WIDER FACE website.